You can learn how to copy data of CSV files into SQL Database with Azure Data Factory following this post. Please note, you must install SSMS from Download SQL Server Management Studio (SSMS) before following this post.

After setting up SSMS, follow below step to copy data of CSV files into SQL Database.

- Upload CSV files into Azure Storage

- Create a table in your SQL Database

- Copy CSV files into your SQL Database with Azure Data Factory

After downloading "USDJPY.csv" file from http://www.m2j.co.jp/market/historical.php, upload the file into your Azure Storage.

Create a table in your SQL Database

Setup your SQL Database instance if you don't have it. After creating the instance, setup firewall following Azure SQL Database server-level and database-level firewall rules to access with "sqlcmd" command from your computer.

Execute below command from your client computer. And note to execute below with one liner, because I just add line breaks to read easily.

normalian> sqlcmd.exe -S "server name".database.windows.net -d "database name" -U "username"@"server name" -P "password" -I -Q

"CREATE TABLE [dbo].[USDJPY]

(

[ID] INT NOT NULL PRIMARY KEY IDENTITY(1,1),

[DATE] DATETIME NOT NULL,

[OPEN] FLOAT NOT NULL,

[HIGH] FLOAT NOT NULL,

[LOW] FLOAT NOT NULL,

[CLOSE] FLOAT NOT NULL

)"You can remove the table using below command if you mistake something for this setting.

normalian> sqlcmd.exe -S "server name".database.windows.net -d "database name" -U "username"@"server name" -P "password" -I -Q "DROP TABLE [dbo].[USDJPY]"

Copy CSV files into your SQL Database with Azure Data Factory

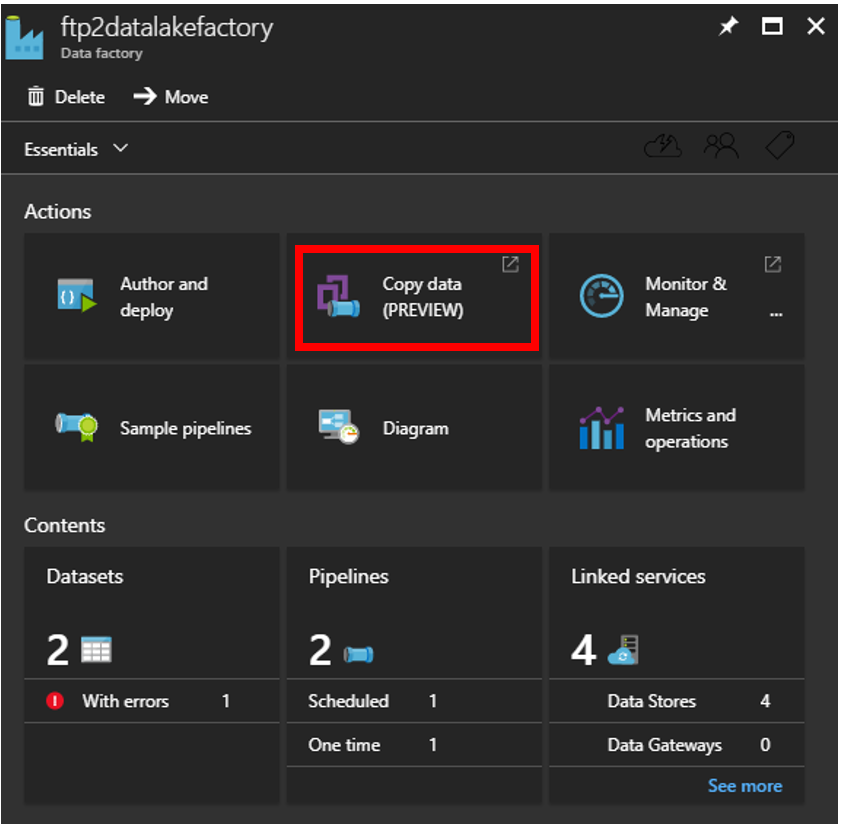

At first, create your Azure Data Factory instance. And choose "Copy data(PREVIEW)" button like below.

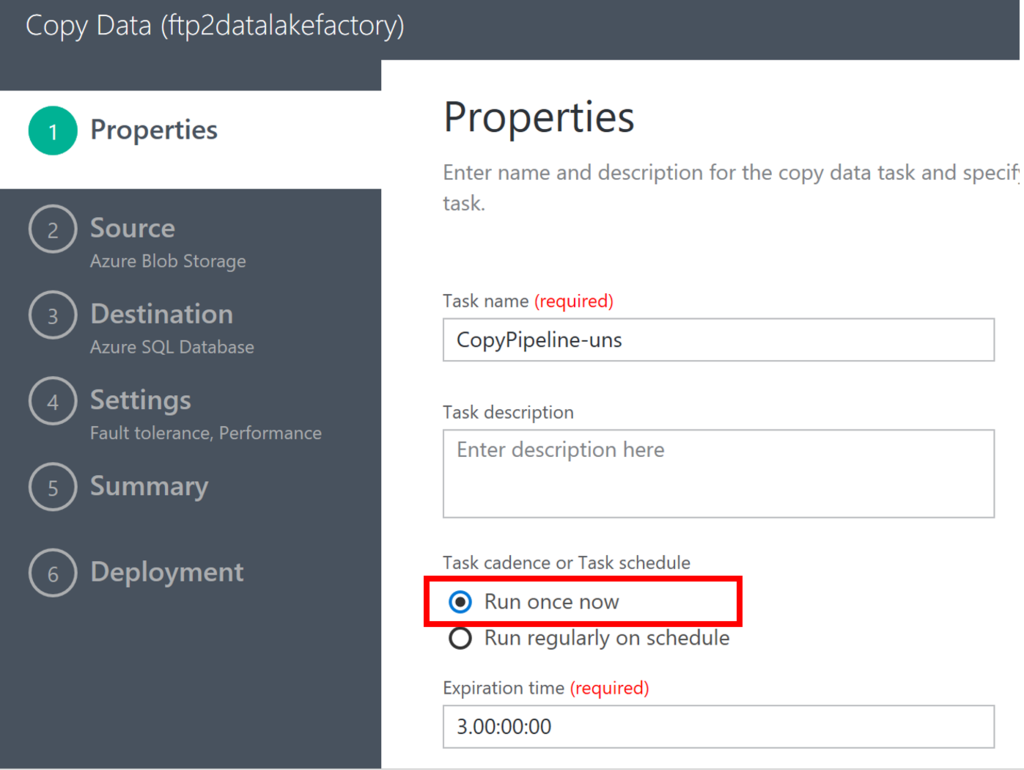

Next, choose "Run once now" to copy your CSV files.

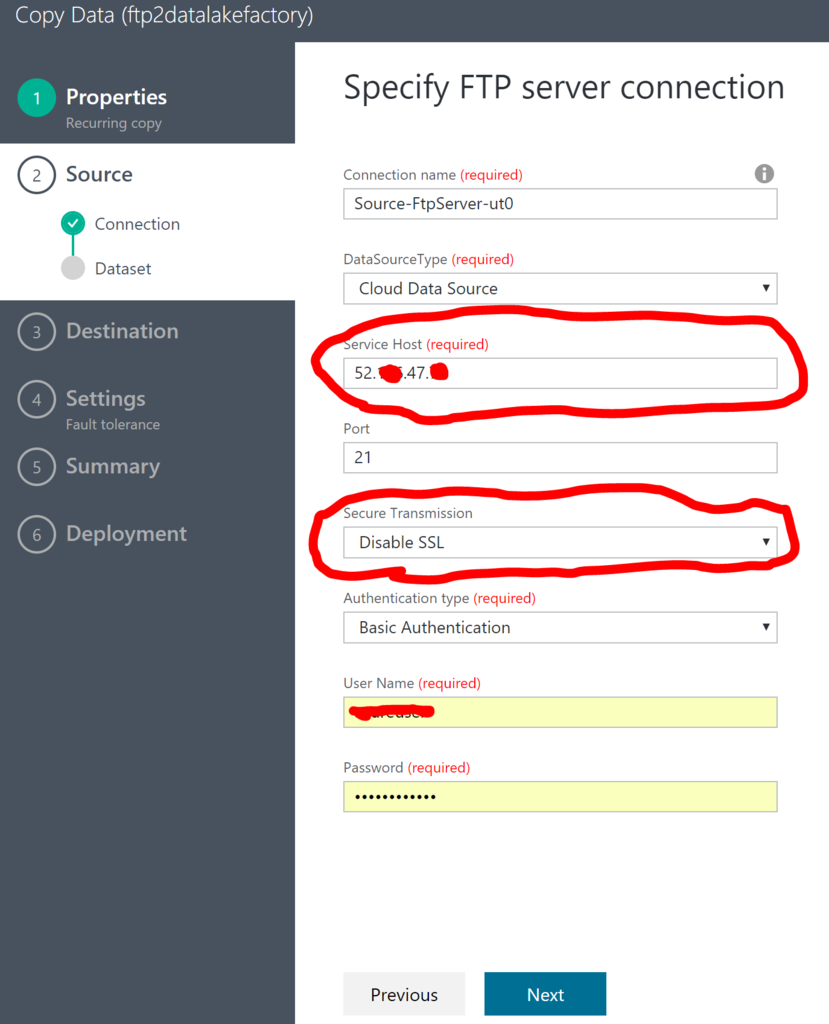

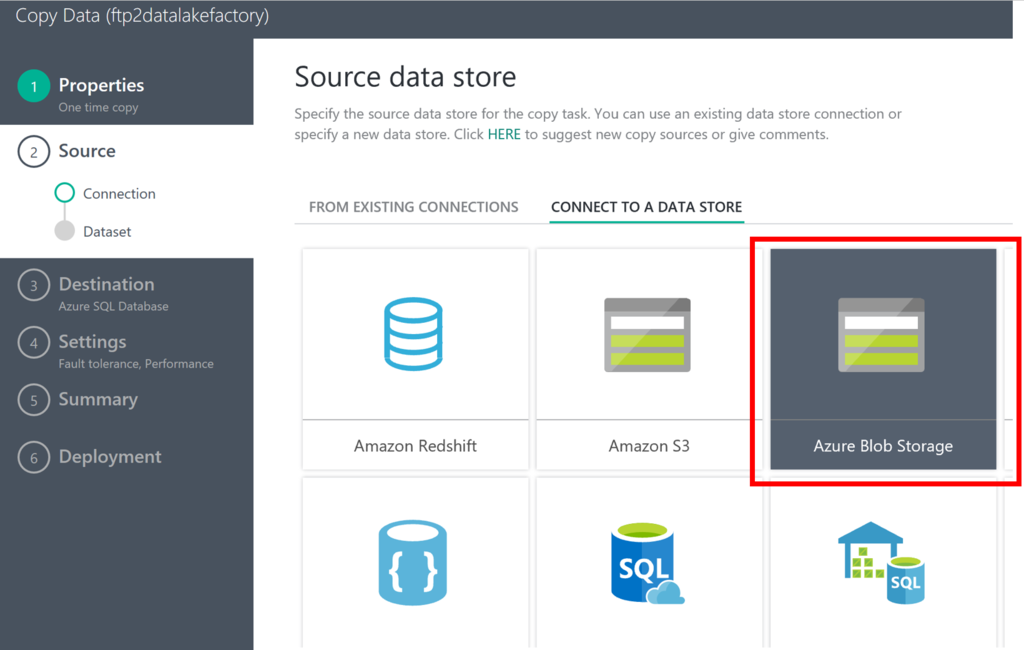

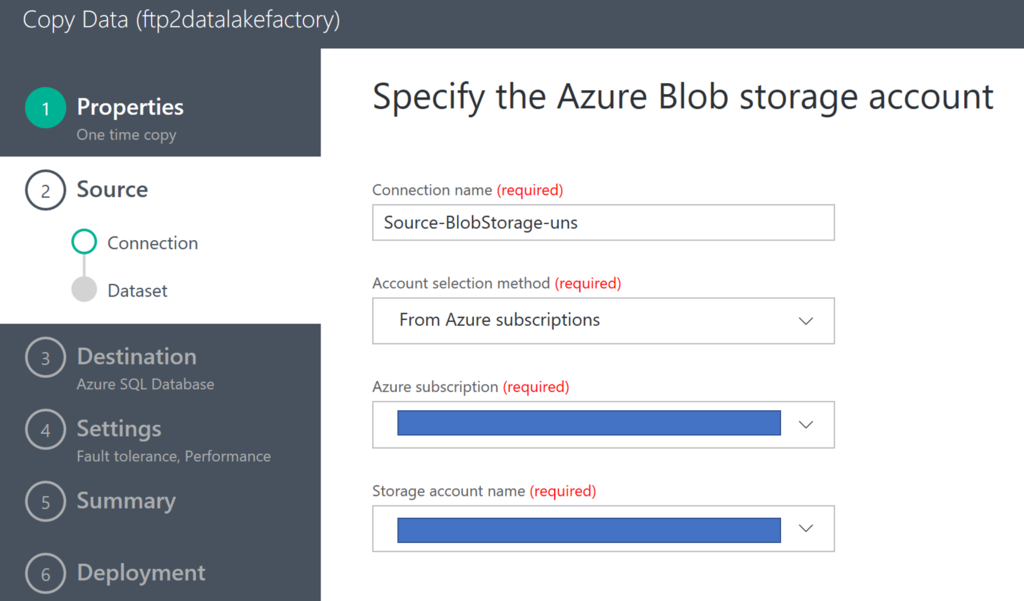

Choose "Azure Blob Storage" as your "source data store", specify your Azure Storage which you stored CSV files.

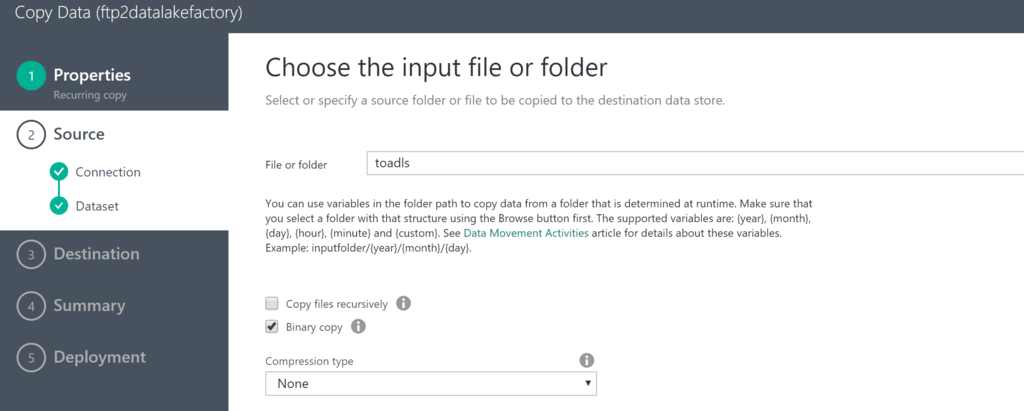

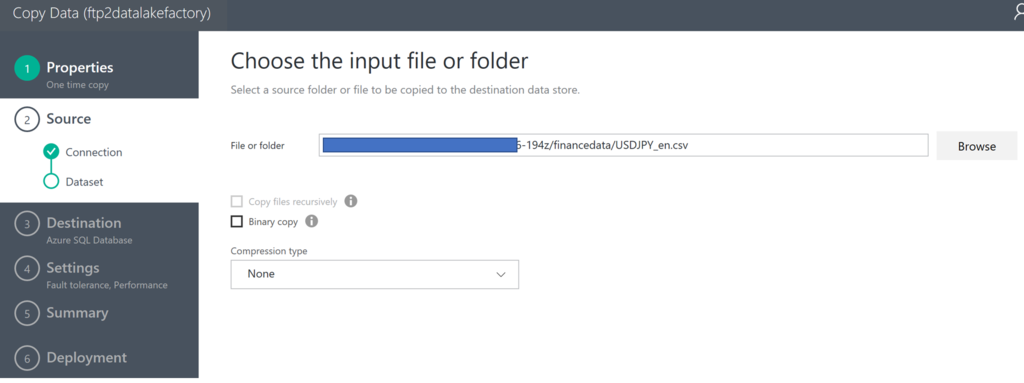

Choose your CSV files from your Azure Storage.

Choose "Comma" as your CSV files delimiter and input "Skip line count" number if your CSV file has headers.

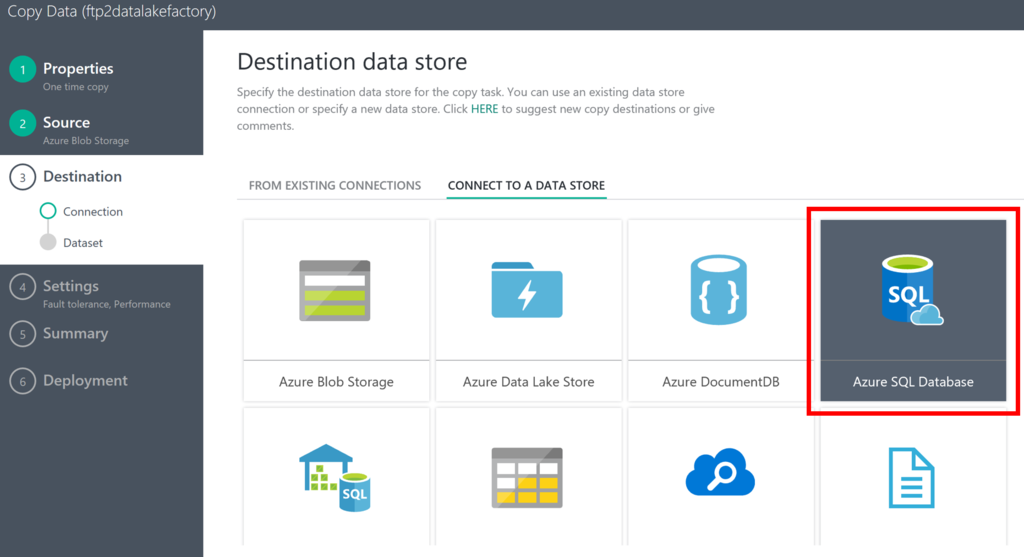

Choose "Azure SQL Database" as your "destination data store".

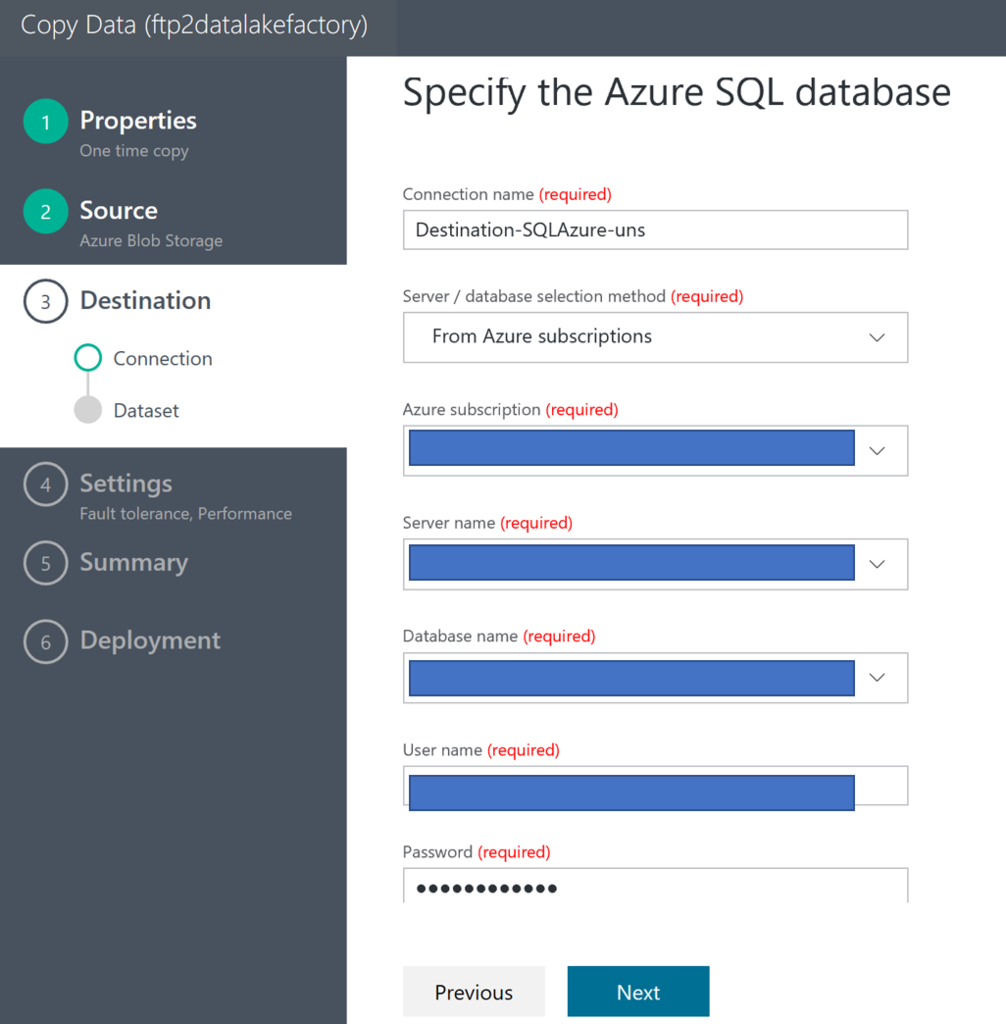

Input your "Azure SQL Database" info to specify your instance.

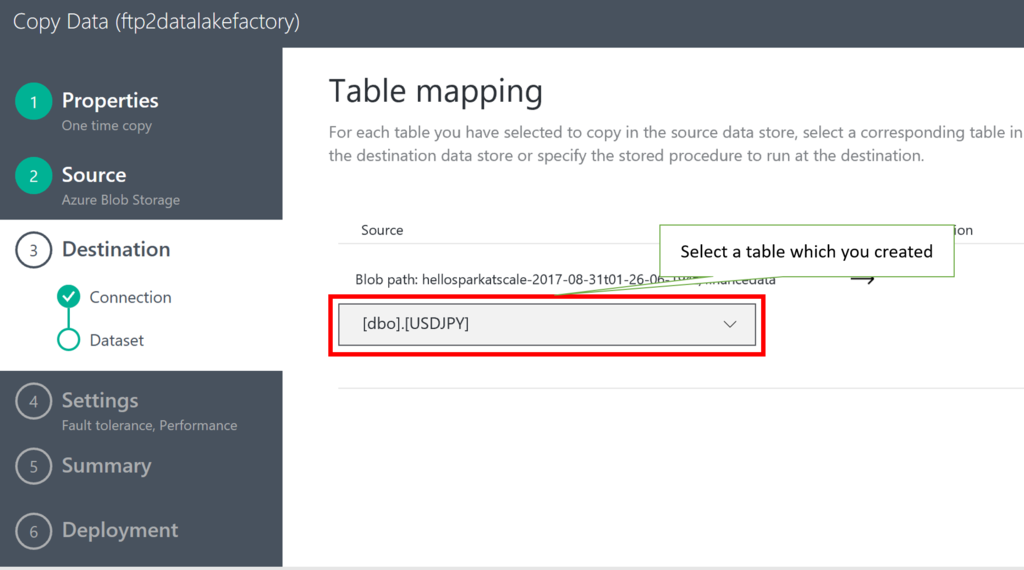

Select your table from your SQL Database instance.

Check your data mapping.

Execute data copy from CSV files to SQL Database just confirming next wizards.

After completing this pipeline, execute below command in your machine. You can get data from SQL Database.

normalian> sqlcmd.exe -S "server name".database.windows.net -d "database name" -U "username"@"server name" -P "password" -I -Q "SELECT * FROM [dbo].[USDJPY] ORDER BY 1;"

You can get some data from SQL Database if you have setup correctly.