Data copy from FTP server to SQL Data Lake using Azure Data Factory

You can achieve to setup data copy scenarios with Azure Data Factory from your FTP server to your SQL Data Lake by following this article. I believe this scenario is quite simple, but you can avoid to be confused by utilizing this article.

How to setup FTP server on Microsoft Azure

Create a Linux, CentOS7, virtual machine at first. After that, connect the VM with ssh, and run below commands.

[root@ftpsourcevm ~]# sudo su - [root@ftpsourcevm ~]# yum -y update && yum -y install vsftpd

Please setup this vsftp server as passive mode with below sample. As far as I have confirmed, Azure Data Factory supports only passive mode ftp servers.

[root@ftpsourcevm ~]# vi /etc/vsftpd/vsftpd.conf # When "listen" directive is enabled, vsftpd runs in standalone mode and # listens on IPv4 sockets. This directive cannot be used in conjunction # with the listen_ipv6 directive. listen=YES # # This directive enables listening on IPv6 sockets. By default, listening # on the IPv6 "any" address (::) will accept connections from both IPv6 # and IPv4 clients. It is not necessary to listen on *both* IPv4 and IPv6 # sockets. If you want that (perhaps because you want to listen on specific # addresses) then you must run two copies of vsftpd with two configuration # files. # Make sure, that one of the listen options is commented !! listen_ipv6=NO pam_service_name=vsftpd userlist_enable=YES tcp_wrappers=YES pasv_enable=YES pasv_addr_resolve=YES pasv_min_port=60001 ( you need add this port to this VM NSG setup pasv_max_port=60010 ( you need add this port to this VM NSG setup pasv_address=(update global ip address of your ftp server vm e.g. 52.1xx.47.xx)

Run below commands to reflect your config change.

[root@ftpsourcevm ~]# systemctl restart vsftpd [root@ftpsourcevm ~]# systemctl enable vsftpd

Finally, you need to add allow port configuration between pasv_min_port and pasv_max_port into NSG. Please refer below image.

How to setup Azure Data Lake for Azure Data Factory

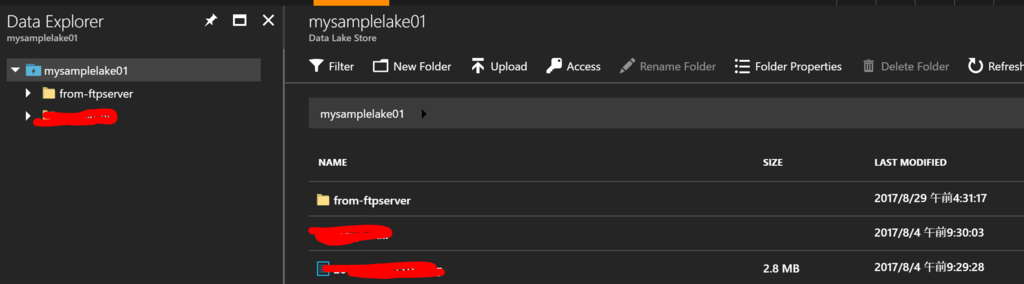

Just create your Azure Data Lake instance, and add a directory for Azure Data Factory like below.

How to setup Azure Data Factory to copy from your FTP server to your Azure Data Lake

After creating your Azure Data Factory instance, choose "Copy data (PREVIEW)" to setup this.

Change this schedule period if it's needed.

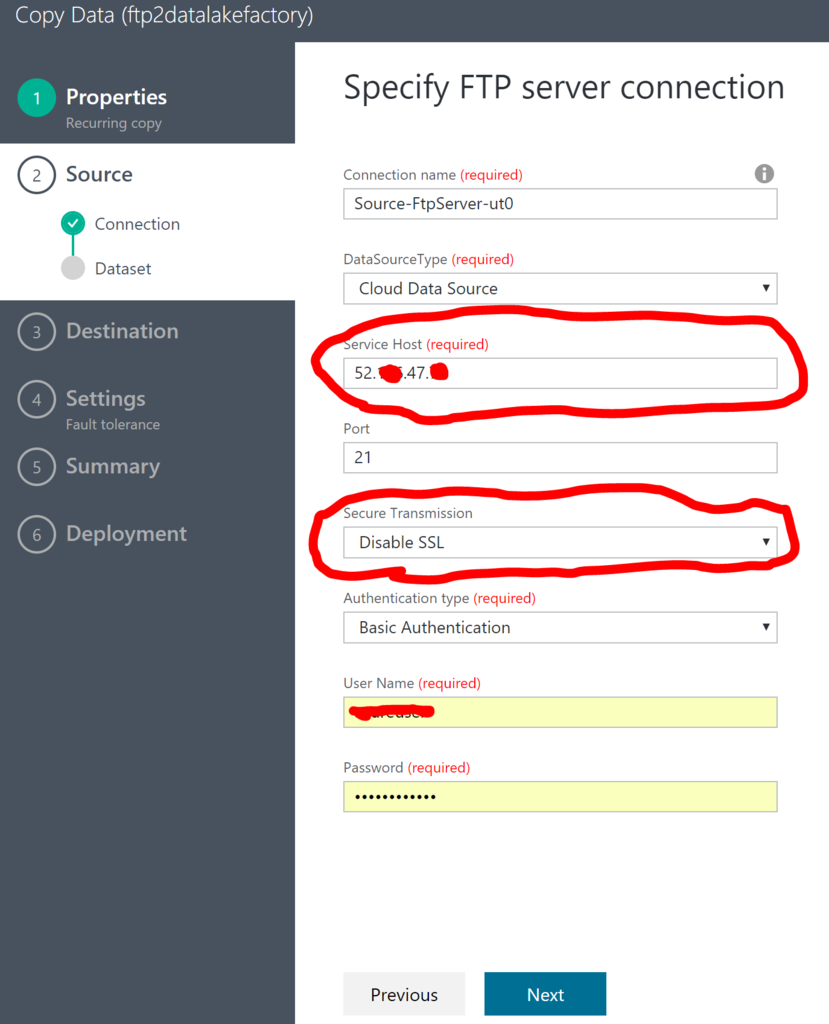

Choose "FTP" as "CONNECT TO A DATA SOURCE", but you can also choose other data sources such like S3 and other cloud data sources.

Change to "Disable SSL" at "Secure Transmission" in this sample, and please setup SSL when you will deploy this pipeline in your production environments. Input a global in address of your ftp server and credential account info of your ftp server. You will get a connection error if you setup active mode FTP servers.

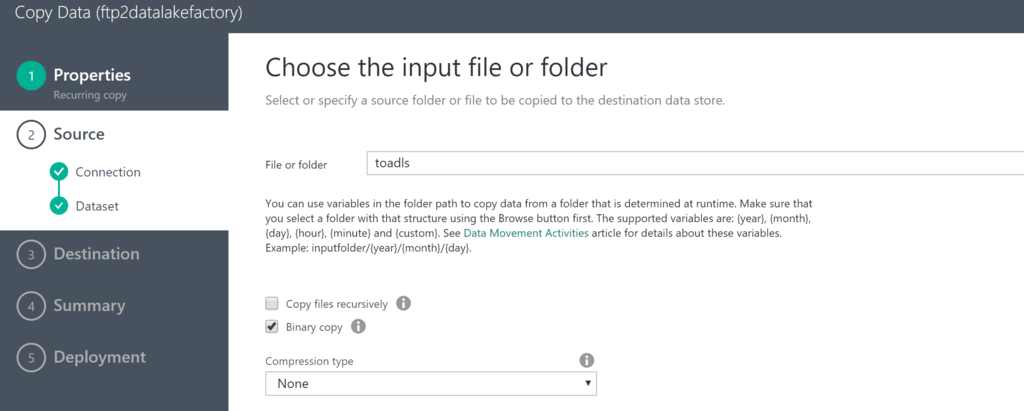

Choose a folder for data source of Azure Data Factory. In this sample, we setup as binary copy mode. But you can setup other data copy types such like cvs and others.

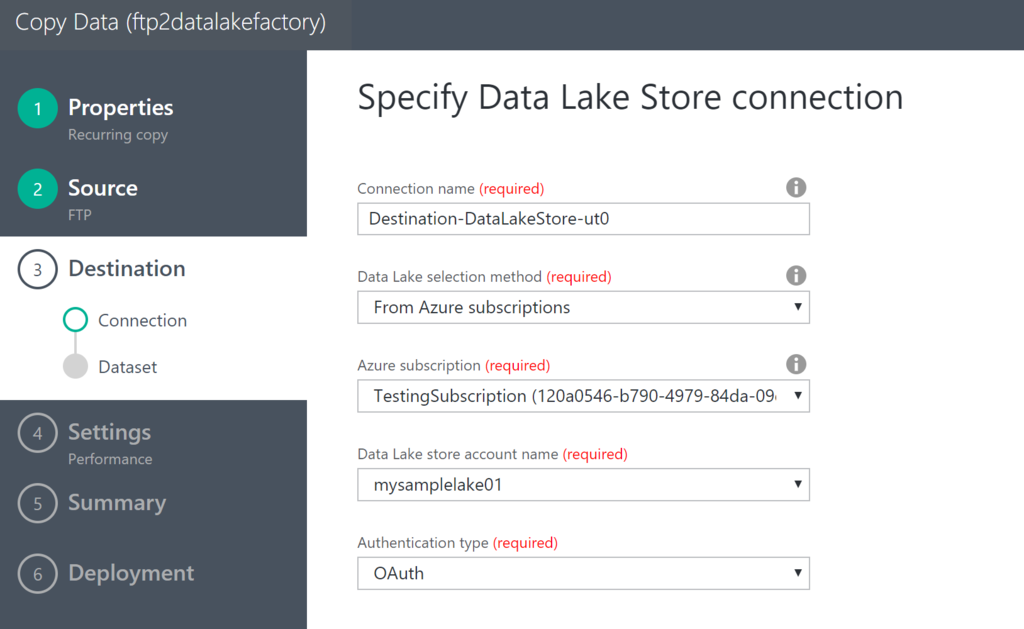

Choose "Azure Data Lake Store" as "CONNECT TO A DATA STORE" in this article.

Choose your Azure Data Lake Store instance for storing data like below.

Choose a folder for data storing destination.

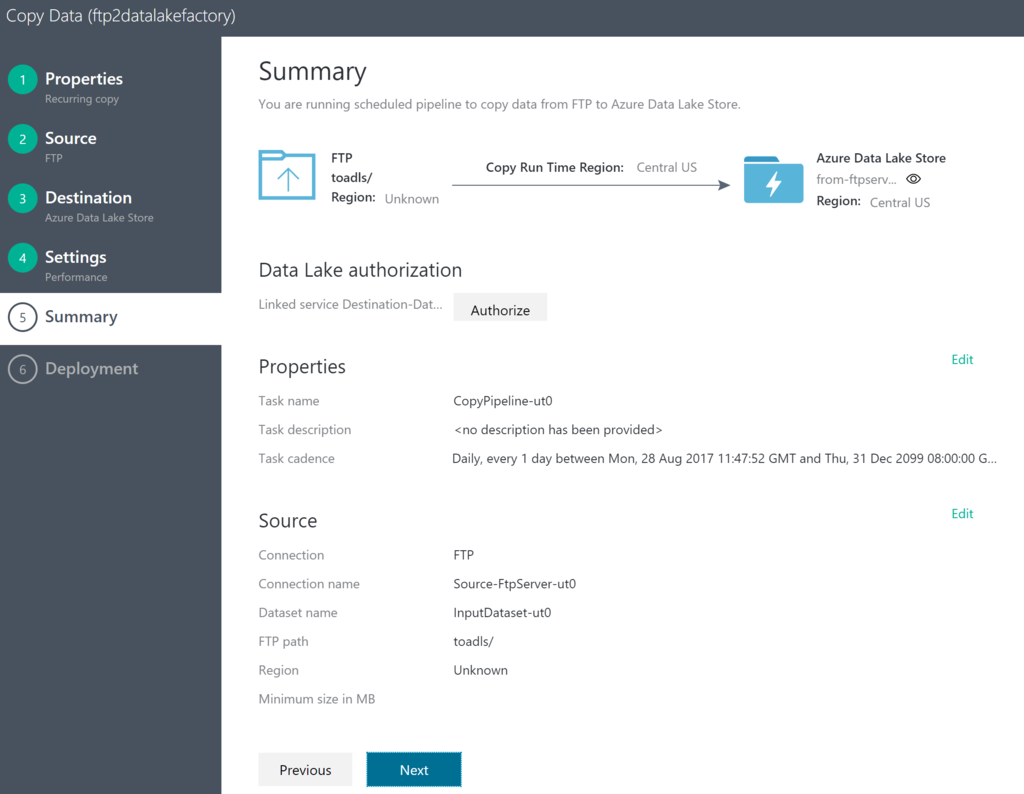

Confirm your setup info, and submit to deploy this pipeline.

Confirm your setup

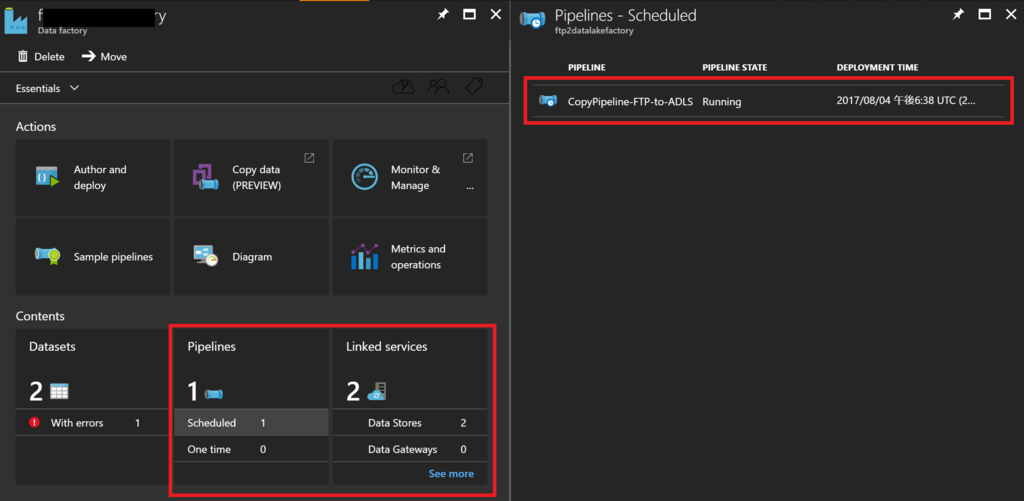

You can view your data copy pipeline in your Azure Data Factory like below. Azure Data Factory will copy your data on your FTP server into your Azure Data Lake following your schedule.

Reference

- https://www.liquidweb.com/kb/how-to-install-and-configure-vsftpd-on-centos-7/

- http://www.itzgeek.com/how-tos/linux/centos-how-tos/enable-passive-mode-in-ftp-on-centos-7-rhel-7-for-filezilla-and-winscp.html

- http://qiita.com/dogyear/items/289b314db9af42e15cfa

- http://dev.classmethod.jp/cloud/aws/to-be-aware-of-when-settingup-ftpserver-with-aws/

- Switch "Secure Transmission" to "Disable SSL" https://docs.microsoft.com/en-us/azure/data-factory/data-factory-scheduling-and-execution

- https://docs.microsoft.com/en-us/azure/data-factory/data-factory-ftp-connector