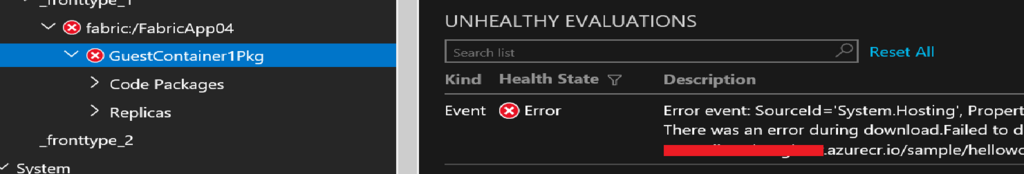

As you know, Service Fabric is one of Microsoft's platforms for building applications with a microservice architecture. It can deploy Docker images built on both Windows and Linux base images, but when you deploy Docker images you must make sure that the "Operating System" of your Service Fabric cluster matches the base OS of those images. If you get the error message "There was an error during download.Failed" while deploying your images, there are several possible reasons.

The error is usually caused by one of the following:

- The URL of your Docker image is invalid

- The authentication information for your Docker registry account is invalid

- There is a virtualization mismatch: the base OS version of your Docker image does not match the operating system version of your Service Fabric cluster

The first two causes are easy to spot and fix, but it is much harder to tell when the error is caused by the third one. In this article, I will dig into that third cause.

Windows container base images need to match the Windows version of the host they run on. Unfortunately, Windows introduced a breaking change that makes container images incompatible across host versions, as described in the article below.

docs.microsoft.com

You therefore need to choose the "Operating System" of your Service Fabric cluster based on the base OS of your Docker image, as shown below.

- You must specify "WindowsServer 2016-Datacenter-with-Containers" as the Service Fabric cluster Operating System if your base OS is "Windows Server 2016"

- You must specify "WindowsServerSemiAnnual Datacenter-Core-1709-with-Containers" as the Service Fabric cluster Operating System if your base OS is "Windows Server version 1709"
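If you want to catch this mismatch before deploying, a small script can compare the active "FROM" lines of a Dockerfile with the cluster's Operating System. The sketch below is only a heuristic: it assumes the tag suffix convention used in this article (`-sac2016` for Windows Server 2016, `-1709` for Windows Server version 1709), and `TAG_SUFFIX_TO_CLUSTER_OS`, `base_images`, `required_cluster_os`, and `check_dockerfile` are hypothetical helpers, not part of any Service Fabric tooling.

```python
import re
from typing import List, Optional

# Assumed mapping, based only on the two tag conventions used in this article.
# Image publishers name their tags differently, so extend it for your own images.
TAG_SUFFIX_TO_CLUSTER_OS = {
    "sac2016": "WindowsServer 2016-Datacenter-with-Containers",
    "1709": "WindowsServerSemiAnnual Datacenter-Core-1709-with-Containers",
}

# Matches an active FROM instruction (a leading '#' makes the line a comment, so it
# won't match), skips options such as --platform=..., and captures image and stage name.
FROM_RE = re.compile(r"^\s*FROM\s+(?:--\S+\s+)*(\S+)(?:\s+AS\s+(\S+))?", re.IGNORECASE)


def base_images(dockerfile_text: str) -> List[str]:
    """Return the external images referenced by FROM, ignoring earlier build stages."""
    stages = set()
    images = []
    for line in dockerfile_text.splitlines():
        match = FROM_RE.match(line)
        if not match:
            continue
        image, stage = match.group(1), match.group(2)
        if image.lower() not in stages:  # e.g. "FROM build AS publish" reuses a stage
            images.append(image)
        if stage:
            stages.add(stage.lower())
    return images


def required_cluster_os(image: str) -> Optional[str]:
    """Guess the cluster Operating System an image needs from its tag suffix."""
    last_part = image.rsplit("/", 1)[-1]  # drop the registry host (it may contain a port)
    tag = last_part.split(":", 1)[1] if ":" in last_part else ""
    for suffix, cluster_os in TAG_SUFFIX_TO_CLUSTER_OS.items():
        if tag.endswith(suffix):
            return cluster_os
    return None


def check_dockerfile(dockerfile_text: str, cluster_os: str) -> List[str]:
    """Return one message per base image that does not fit the given cluster OS."""
    problems = []
    for image in base_images(dockerfile_text):
        needed = required_cluster_os(image)
        if needed is None:
            problems.append(f"{image}: unknown base OS, check it manually")
        elif needed != cluster_os:
            problems.append(f"{image}: needs '{needed}', cluster uses '{cluster_os}'")
    return problems
```

For example, running `check_dockerfile(text, "WindowsServer 2016-Datacenter-with-Containers")` on a Dockerfile whose active "FROM" lines use `-1709` tags reports each of those images, which is exactly the kind of mismatch that shows up as the download error.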

Example: Matching OS Versions

It's important that your Service Fabric cluster's "Operating System" matches the base OS version specified by the "FROM" instruction in your Dockerfile. For completeness, I've also included the relevant parts of ServiceManifest.xml and ApplicationManifest.xml.

Example - part of Dockerfile

```dockerfile
# Base image for a "WindowsServer 2016-Datacenter-with-Containers" cluster
#FROM microsoft/aspnetcore-build:2.0.5-2.1.4-nanoserver-sac2016 AS base
# Base image for a "WindowsServerSemiAnnual Datacenter-Core-1709-with-Containers" cluster
FROM microsoft/aspnetcore:2.0-nanoserver-1709 AS base
WORKDIR /app
EXPOSE 80

# Build image for a "WindowsServer 2016-Datacenter-with-Containers" cluster
#FROM microsoft/aspnetcore-build:2.0.5-2.1.4-nanoserver-sac2016 AS build
# Build image for a "WindowsServerSemiAnnual Datacenter-Core-1709-with-Containers" cluster
FROM microsoft/aspnetcore-build:2.0-nanoserver-1709 AS build
WORKDIR /src
COPY *.sln ./
COPY NetCoreWebApp/NetCoreWebApp.csproj NetCoreWebApp/
RUN dotnet restore
COPY . .
WORKDIR /src/NetCoreWebApp
RUN dotnet build -c Release -o /app

FROM build AS publish
RUN dotnet publish -c Release -o /app

FROM base AS final
WORKDIR /app
COPY --from=publish /app .
ENTRYPOINT ["dotnet", "NetCoreWebApp.dll"]
```

Example - part of ApplicationManifest.xml

```xml
<ServiceManifestImport>
  <ServiceManifestRef ServiceManifestName="GuestContainer1Pkg" ServiceManifestVersion="1.0.0" />
  <ConfigOverrides />
  <Policies>
    <ContainerHostPolicies CodePackageRef="Code">
      <RepositoryCredentials AccountName="Username of your Container registry" Password="password of your Container registry" PasswordEncrypted="false"/>
      <PortBinding ContainerPort="80" EndpointRef="GuestContainer1TypeEndpoint"/>
    </ContainerHostPolicies>
  </Policies>
</ServiceManifestImport>
```
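Because invalid registry credentials (the second cause listed earlier) produce the same download error, it can help to sanity-check the manifest before deploying. The following Python sketch uses the standard library's `xml.etree.ElementTree` to confirm that every `ContainerHostPolicies` element has a `RepositoryCredentials` child with a non-empty `AccountName` and `Password`. It only checks that the values are present, not that they are correct. The function names `local_name` and `missing_credentials` are hypothetical, and tag matching ignores XML namespaces so the same code works on a bare fragment and on a full manifest with an `xmlns` declaration.

```python
import xml.etree.ElementTree as ET
from typing import List


def local_name(tag: str) -> str:
    """Strip a '{namespace}' prefix so matching works with or without xmlns."""
    return tag.rsplit("}", 1)[-1]


def missing_credentials(manifest_xml: str) -> List[str]:
    """Return the CodePackageRef of each ContainerHostPolicies lacking usable credentials."""
    root = ET.fromstring(manifest_xml)
    problems = []
    for policy in root.iter():
        if local_name(policy.tag) != "ContainerHostPolicies":
            continue
        creds = [child for child in policy if local_name(child.tag) == "RepositoryCredentials"]
        # Presence check only: empty or whitespace-only values count as missing.
        has_usable = any(
            c.get("AccountName", "").strip() and c.get("Password", "").strip() for c in creds
        )
        if not has_usable:
            problems.append(policy.get("CodePackageRef", "<unknown>"))
    return problems
```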

Example - part of ServiceManifest.xml

```xml
<EntryPoint>
  <!-- Follow this link for more information about deploying Windows containers to Service Fabric: https://aka.ms/sfguestcontainers -->
  <ContainerHost>
    <ImageName>"Username of your Container registry".azurecr.io/sample/helloworldapp:latest</ImageName>
  </ContainerHost>
</EntryPoint>
```
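Likewise, for the first cause (an invalid image URL), a quick format check on `ImageName` can catch obvious mistakes such as a missing registry host or stray quotes left over from a placeholder. This is another hypothetical sketch: `IMAGE_RE` is a deliberately loose approximation of the Docker image-reference format, not the official grammar, it assumes the image lives in a private registry with an explicit host (such as `<name>.azurecr.io`), and it cannot tell whether the image actually exists in the registry. The registry name `myregistry` used when testing it is a placeholder.

```python
import re
import xml.etree.ElementTree as ET
from typing import List

# Loose, assumed pattern: <registry-host>[:port]/<repository path>[:tag]
IMAGE_RE = re.compile(
    r"^[a-z0-9-]+(?:\.[a-z0-9-]+)+(?::\d+)?"      # registry host, e.g. myregistry.azurecr.io
    r"/[a-z0-9]+(?:[._/-][a-z0-9]+)*"             # repository path, e.g. sample/helloworldapp
    r"(?::[A-Za-z0-9_][A-Za-z0-9_.-]{0,127})?$"   # optional tag, e.g. latest
)


def image_names(service_manifest_xml: str) -> List[str]:
    """Collect the text of every ImageName element, ignoring XML namespaces."""
    root = ET.fromstring(service_manifest_xml)
    return [
        (element.text or "").strip()
        for element in root.iter()
        if element.tag.rsplit("}", 1)[-1] == "ImageName"
    ]


def bad_image_names(service_manifest_xml: str) -> List[str]:
    """Return image names that do not look like <host>/<path>[:tag]."""
    return [name for name in image_names(service_manifest_xml) if not IMAGE_RE.match(name)]
```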