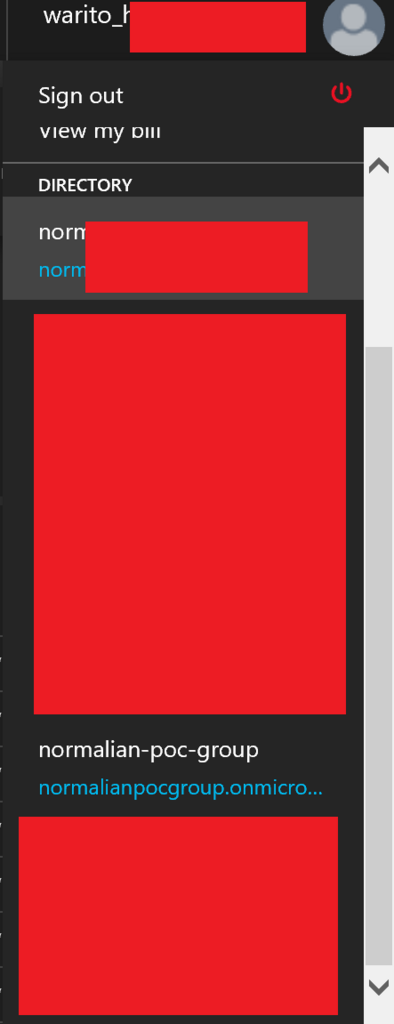

Every Azure subscription is associated with an Azure AD tenant. You may work with several different Azure AD tenants, as illustrated in the figure. This sometimes causes issues, but this post explains how to use these features properly.

Azure AD also manages the "School or Work Accounts" in your organization. When you log in to Azure, you have to choose an account type: either "School or Work Account" or "Microsoft Account"/"Personal Account". The account types can be summarized as follows.

- "Microsoft Account" and "Personal Account" are technically the same thing, and they are managed by Microsoft services. They were called "LIVE ID" in the past.

- A "School or Work Account" is managed by your own Azure AD tenant, such as "xxxxx.onmicrosoft.com", and you can assign a custom domain name such as "contoso.com" to your tenant.

As far as I have tried, it's easy to access subscriptions across Azure AD tenants with a "Microsoft Account". However, almost all companies use "School or Work Accounts" for governance reasons: "Microsoft Accounts" are managed by Microsoft, so it's difficult for a company to enable or disable them immediately.

To grant users from another Azure AD tenant access to the subscriptions associated with your Azure AD tenant, you need to invite those users into your tenant.

How to give users from other Azure AD tenants access to your subscriptions

There are two steps to grant users from other Azure AD tenants access to your subscriptions.

- Invite the users into your Azure AD tenant

- Assign IAM roles

Invite the users into your Azure AD tenant

Refer to "Inviting Microsoft Account users to your Azure AD-secured VSTS tenant | siliconvalve", or follow the steps below.

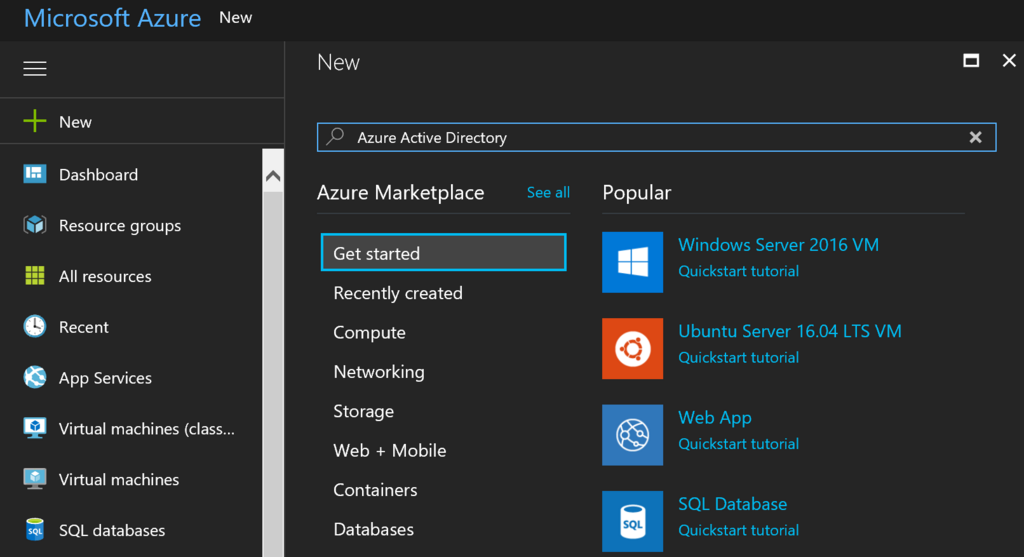

- Log in to portal.azure.com

- Sign in with the Global Admin credentials of your AD tenant

- Go to the Azure Active Directory option on the blade

- In the next blade, you will find the “User settings” option

- Under “User settings”, check the option “admin and users in guest inviter role can invite”

- This option should be set to Yes

- After that, go to “Users and groups” in the same blade and click “All users”

- Under “All users”, you will see the “New guest user” option

- Click it to invite users from other AD tenants

- Once the user accepts the invitation, you can grant them access to resources under the subscriptions of your AD tenant
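The portal steps above can also be scripted. The following is a minimal sketch, not part of the original walkthrough, of the request that the Microsoft Graph invitation endpoint (`POST /v1.0/invitations`) expects. The function name `build_invitation_request`, the guest address, and the redirect URL are hypothetical and chosen only for illustration. The sketch builds the request only. Sending it requires an access token for an account that is allowed to invite guests, which is not shown here.

```python
import json

# Microsoft Graph endpoint that creates a guest (B2B) invitation.
GRAPH_INVITATIONS_URL = "https://graph.microsoft.com/v1.0/invitations"


def build_invitation_request(guest_email, redirect_url, send_email=True):
    """Return the (url, body) pair for inviting one guest user.

    This helper is hypothetical; it only assembles the JSON body and
    does not call Microsoft Graph.
    """
    if "@" not in guest_email:
        raise ValueError("guest_email must be an email address")
    body = {
        # Address of the user in the other tenant.
        "invitedUserEmailAddress": guest_email,
        # Where the user lands after accepting the invitation.
        "inviteRedirectUrl": redirect_url,
        # Ask Azure AD to send the invitation mail itself.
        "sendInvitationMessage": send_email,
    }
    return GRAPH_INVITATIONS_URL, body


# Example values are placeholders, not real accounts.
url, body = build_invitation_request(
    "alice@fabrikam.example", "https://portal.azure.com"
)
print("POST", url)
print(json.dumps(body, indent=2))
```

The body would be sent as JSON with an `Authorization: Bearer <token>` header. The response contains the object ID of the new guest user, which is what the role assignment in the next step refers to.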

Assign IAM roles

Refer to "Add or change Azure admin subscription roles | Microsoft Docs".
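For readers who prefer to script this step, here is a hedged sketch of the Azure Resource Manager request that creates a role assignment at subscription scope. It assumes the role-based access control REST API (`Microsoft.Authorization/roleAssignments`, API version `2022-04-01`) rather than the portal flow described in the linked document. The function name `build_role_assignment_request` and all IDs in the example are hypothetical placeholders. The two role GUIDs are the built-in "Reader" and "Contributor" role definition IDs.

```python
import uuid

ARM_ENDPOINT = "https://management.azure.com"
API_VERSION = "2022-04-01"

# Built-in Azure role definition IDs (the same in every tenant).
BUILT_IN_ROLES = {
    "Reader": "acdd72a7-3385-48ef-bd42-f606fba81ae7",
    "Contributor": "b24988ac-6180-42a0-ab88-20f7d2635c24",
}


def build_role_assignment_request(subscription_id, principal_id,
                                  role_name, assignment_id=None):
    """Return the (url, body) pair for a PUT that assigns a role.

    Hypothetical helper: it only assembles the request and does not
    call Azure. principal_id is the object ID of the invited guest
    user in YOUR tenant, not in the user's home tenant.
    """
    role_guid = BUILT_IN_ROLES[role_name]
    scope = f"/subscriptions/{subscription_id}"
    # Each role assignment is named by a GUID chosen by the caller.
    assignment_id = assignment_id or str(uuid.uuid4())
    url = (
        f"{ARM_ENDPOINT}{scope}/providers/Microsoft.Authorization"
        f"/roleAssignments/{assignment_id}?api-version={API_VERSION}"
    )
    body = {
        "properties": {
            "roleDefinitionId": (
                f"{scope}/providers/Microsoft.Authorization"
                f"/roleDefinitions/{role_guid}"
            ),
            "principalId": principal_id,
        }
    }
    return url, body


# Placeholder IDs for illustration only.
url, body = build_role_assignment_request(
    "00000000-0000-0000-0000-000000000000",
    "11111111-1111-1111-1111-111111111111",
    "Reader",
)
print("PUT", url)
print(body)
```

Starting with "Reader" and widening the role later keeps the guest's access as narrow as possible while you confirm that the invitation worked.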